Apple’s latest Visual Intelligence feature brings powerful search capabilities to the iPhone 16 lineup. Visual Intelligence lets users identify objects, text, and places by simply pointing their iPhone camera at them, similar to Google Lens but deeply integrated with iOS 18.2’s camera system.

The feature works through Camera Control and requires Apple Intelligence to be enabled on the device. Users outside the EU can access this tool by pressing and holding the Camera Control button, making visual searches quick and intuitive.

Visual Intelligence transforms everyday objects into searchable content. From identifying plants on a desk to learning about landmarks while traveling, the iPhone 16’s camera now serves as a gateway to instant information about the world around us.

Apple Visual Intelligence on iPhone: The Complete Guide

Apple continues to blur the line between the physical and digital worlds — and with Visual Intelligence, your iPhone can now see, understand, and interact with the world around you.

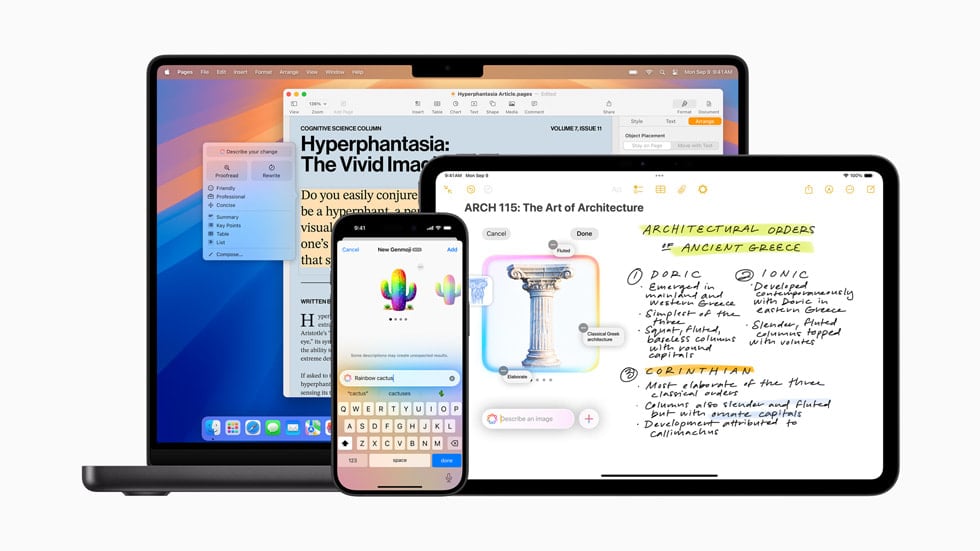

Introduced as part of Apple Intelligence in iOS 18.2 and expanded in iOS 26, Visual Intelligence takes the familiar Visual Look Up feature to a whole new level. It uses on-device AI to identify objects, text, and scenes through the camera and, as of iOS 26, on your screen, and it lets you search or act on what you see instantly.

In this guide, we’ll cover what Apple Visual Intelligence is, how to use it, and the ways it’s transforming everyday iPhone use.

🤖 What Is Apple Visual Intelligence?

Apple Visual Intelligence is an AI-powered feature built into iOS that allows your iPhone to analyze images, text, and objects — both through the Camera and on-screen content — to provide smart, contextual results.

Think of it as Apple’s version of Google Lens or Circle to Search, but designed with privacy-first, on-device intelligence.

According to Apple Support, Visual Intelligence can:

- Identify plants, animals, landmarks, and products

- Extract and interact with text in photos or screenshots

- Suggest related actions, like shopping, searching, or translating

- Recognize on-screen content in apps for instant lookup

(source: Apple Support)

📱 How to Access Visual Intelligence on iPhone

Visual Intelligence is built directly into iOS, so you don’t need to download anything. You can use it in multiple ways:

1. From the Photos App

- Open the Photos app.

- Select an image.

- Look for the info button with sparkles (an “i” with stars), which shows that the Visual Look Up feature has recognized something in the photo.

- Tap it to see details about the object, location, or text in the photo.

For example, if you take a picture of a flower, your iPhone can identify the species and show similar images online.

2. From the Camera App

- Open the Camera and point it at an object.

- Tap the Visual Intelligence button when it appears.

- Instantly identify what’s in view — from a product to a landmark.

This is especially useful for travelers or shoppers who want quick information without typing a single word.

3. On-Screen Visual Intelligence

As of iOS 26, you can now use on-screen Visual Intelligence — Apple’s version of “Circle to Search.”

Here’s how:

- Press the same buttons you use to take a screenshot (the side button and the volume up button together).

- Circle or highlight any object, image, or text on your screen.

- Visual Intelligence will analyze it and show related results, links, or actions.

This works across apps — Safari, Messages, Instagram, or even screenshots — allowing instant lookup without switching apps.

(sources: Tom’s Guide, Wccftech)

4. Using Visual Intelligence with Text

Visual Intelligence can also detect text in images or on-screen content.

For example:

- Tap a phone number to call directly.

- Copy handwritten notes from a photo.

- Translate foreign text in real time.

This builds on Apple’s Live Text feature but now integrates deeper AI understanding.
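To make the idea of acting on detected text more concrete, here is a minimal Python sketch of how recognized text could be scanned for phone numbers and email addresses and turned into suggested actions. It is not Apple's implementation: the function name `suggest_text_actions`, the action labels, and the simplified regular expressions are all assumptions chosen for illustration.

```python
import re

# Hypothetical, simplified patterns for illustration only.
# Production data detectors handle many more formats and locales.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def suggest_text_actions(recognized_text: str) -> list[tuple[str, str]]:
    """Return (action, value) pairs for phone numbers and emails in the text."""
    actions = []
    for match in PHONE_RE.finditer(recognized_text):
        actions.append(("call", match.group().strip()))
    for match in EMAIL_RE.finditer(recognized_text):
        actions.append(("email", match.group()))
    return actions


if __name__ == "__main__":
    sample = "Call us at +1 (555) 010-9999 or write to hello@example.com"
    for action, value in suggest_text_actions(sample):
        print(action, value)
```

The sketch starts from text that has already been recognized. The recognition step itself (reading text out of a photo) is outside its scope.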

🧠 What You Can Do with Apple Visual Intelligence

Here are some of the most useful things you can do with it:

| Use Case | Description |
|---|---|
| Identify Objects | Instantly recognize plants, animals, landmarks, and artwork. |
| Shop Smarter | Scan a product and find similar items online. |
| Translate Text | Translate signs, menus, or documents in real time. |
| Learn About Locations | Get details about monuments, restaurants, or travel spots. |
| Extract Information | Copy text, addresses, or phone numbers directly from images. |
| Search On-Screen Content | Highlight anything on your screen to get instant AI-powered insights. |

(source: iPhone Life)

🔒 Privacy and On-Device Processing

Unlike other AI systems that rely heavily on cloud servers, Apple emphasizes privacy. Visual Intelligence runs primarily on-device, meaning:

- Your data stays private.

- Images and text aren’t uploaded to Apple servers unless you explicitly share them.

- The system uses Apple Neural Engine for fast, secure processing.

This privacy-first approach sets Apple apart from competitors like Google Lens or Samsung’s Bixby Vision.

⚙️ Devices That Support Visual Intelligence

Visual Intelligence is available on:

- iPhone 15 Pro and iPhone 15 Pro Max (iOS 18.4 or later, via the Action button or Control Center)

- iPhone 16 series (optimized for Apple Intelligence)

- Some features may extend to iPadOS 18.2 and macOS Sequoia with Continuity support.

(source: MacObserver)

💡 Tips for Getting the Most Out of Visual Intelligence

- Use good lighting for better object recognition.

- Update to the latest iOS to access new AI features.

- Try it across different apps — Safari, Messages, Notes, and Photos.

- Experiment with Live Text + Visual Intelligence for maximum productivity.

- Combine with Siri — ask Siri to act on what Visual Intelligence finds.

🔮 The Future of Apple Visual Intelligence

Apple is expected to expand Visual Intelligence as part of its broader Apple Intelligence ecosystem, adding:

- Deeper Siri integration (e.g., “What’s in this photo?”)

- AI-powered visual search in Safari

- Contextual suggestions based on what’s on your screen

- AR integration with Apple Vision Pro

As Apple continues merging AI, AR, and real-world context, Visual Intelligence could become one of the most powerful tools in the iPhone experience.

🏁 Final Thoughts

Apple Visual Intelligence represents a major leap in how we interact with our devices. By combining camera vision, on-screen recognition, and AI context, your iPhone becomes a true smart assistant — capable of understanding the world around you.

Whether you’re identifying a flower, translating a sign, or circling an image on your screen, Apple’s Visual Intelligence makes your iPhone smarter, faster, and more intuitive than ever.

Sources:

- Apple Support – Use Visual Intelligence on iPhone

- Tom’s Guide – How to Use Visual Intelligence on iPhone

- Wccftech – Circle to Search on iPhone with iOS 26

- iPhone Life – On-Screen Visual Intelligence

- MacObserver – Visual Intelligence on iPhone 16

Key Takeaways

- Visual Intelligence requires an iPhone that supports Apple Intelligence: iPhone 16 models running iOS 18.2 or later, or iPhone 15 Pro models running iOS 18.4 or later

- Users can activate the feature by pressing and holding the Camera Control button

- The tool provides instant information about objects, text, and places through the iPhone camera

Explore Visual Intelligence on iPhone 16

Visual Intelligence transforms the iPhone 16’s camera into a powerful tool for object recognition, language translation, and smart interactions with the physical world through ChatGPT integration and advanced AI capabilities.

Understanding Visual Intelligence on iOS 18.2

The Visual Intelligence system activates when users press and hold the Camera Control button on iPhone 16 devices. This feature processes real-time visual data through multiple AI models, including Apple’s proprietary system and ChatGPT.

The system identifies objects, landmarks, and text in the camera’s view instantly. It provides detailed information about businesses, products, and points of interest within seconds.

Users can scan foreign language text for immediate translations. The system supports multiple languages and maintains high accuracy even with challenging fonts or angles.

Advanced Camera Controls and Usage

The Camera Control button serves as the primary gateway to Visual Intelligence features. A long press triggers the AI analysis mode, while a quick tap maintains traditional camera functions.

Visual Intelligence works in both photo and video modes. Users can pause the frame to analyze specific objects or scenes in greater detail.

The system automatically detects important elements like business cards, QR codes, and menus. It suggests relevant actions such as adding contacts, opening websites, or viewing full restaurant menus.
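As a rough illustration of how detected content can be mapped to a suggested action, the sketch below classifies the decoded payload of a QR code. The function name `suggest_action` and the action labels are hypothetical, and the prefix rules are a simplification. This shows the general dispatch idea only, not how iOS actually decides.

```python
def suggest_action(payload: str) -> str:
    """Map a decoded QR payload to a suggested action (illustrative only)."""
    text = payload.strip()
    upper = text.upper()
    if upper.startswith("BEGIN:VCARD"):
        # vCard payloads describe a contact.
        return "add_contact"
    if upper.startswith(("HTTP://", "HTTPS://")):
        # Web links, such as a restaurant's online menu.
        return "open_website"
    if upper.startswith("TEL:"):
        return "call_number"
    # Fall back to simply showing the decoded text.
    return "show_text"


if __name__ == "__main__":
    print(suggest_action("https://example.com/menu"))
    print(suggest_action("BEGIN:VCARD\nFN:Ada Example\nEND:VCARD"))
```

Checking the most specific formats first keeps the fallback simple: anything that is not recognized is treated as plain text.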

Seamless Integration with Siri and ChatGPT

Visual Intelligence combines Siri’s voice commands with ChatGPT’s analytical capabilities. Users can ask questions about objects in view and receive detailed explanations.

The system creates calendar events, reminders, and notes based on visual information. For example, photographing a concert poster can generate an event with all relevant details.
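The concert poster example can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Apple's method: it assumes the poster text has already been recognized, that the first non-empty line is the event title, and that the date is written in a "Month D, YYYY" style with an optional 12-hour time. The function name `poster_to_event` and the returned dictionary shape are hypothetical.

```python
import re
from datetime import datetime
from typing import Optional

MONTHS = {
    name: number
    for number, name in enumerate(
        ["January", "February", "March", "April", "May", "June", "July",
         "August", "September", "October", "November", "December"],
        start=1,
    )
}

# Matches dates such as "March 14, 2025" and times such as "8:00 PM".
DATE_RE = re.compile("(" + "|".join(MONTHS) + r")\s+(\d{1,2}),\s*(\d{4})")
TIME_RE = re.compile(r"(\d{1,2}):(\d{2})\s*(AM|PM)", re.IGNORECASE)


def poster_to_event(poster_text: str) -> Optional[dict]:
    """Build a simple event from recognized poster text, or None if no date."""
    lines = [line.strip() for line in poster_text.splitlines() if line.strip()]
    date_match = DATE_RE.search(poster_text)
    if not lines or date_match is None:
        return None

    month, day, year = date_match.groups()
    hour, minute = 0, 0  # Default to midnight when no time is printed.
    time_match = TIME_RE.search(poster_text)
    if time_match:
        hour = int(time_match.group(1)) % 12
        minute = int(time_match.group(2))
        if time_match.group(3).upper() == "PM":
            hour += 12

    start = datetime(int(year), MONTHS[month], int(day), hour, minute)
    return {"title": lines[0], "start": start}


if __name__ == "__main__":
    poster = "The Midnight Owls\nLive at Harbor Hall\nMarch 14, 2025 at 8:00 PM"
    print(poster_to_event(poster))
```

A real assistant would also need to handle other date formats, venues, and ambiguous titles, which this sketch deliberately leaves out.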

Integration with ChatGPT enables complex queries about objects and scenes. Users receive detailed analysis, historical context, and related information through natural conversation.

Smart suggestions appear based on recognized content. The system learns from user interactions to improve accuracy and relevance of recommendations.

Frequently Asked Questions

Visual Intelligence on iPhone brings powerful camera-based recognition features for identifying objects, text, and places using artificial intelligence technology built into iOS 18.2.

How do you use Visual Intelligence features on the iPhone 15 Pro?

Open Visual Intelligence from the Action button or Control Center, then point the camera at objects, text, or scenes. The system recognizes elements automatically and displays relevant actions on screen.

Users can tap recognized text to copy, translate, or have it read aloud. For objects and places, tapping brings up information and details about what’s in view.

Why are Visual Intelligence capabilities exclusive to newer iPhone models?

The advanced AI processing requires the Neural Engine hardware found in iPhones that support Apple Intelligence, such as the iPhone 15 Pro and iPhone 16 models. These devices contain specialized chips optimized for machine learning tasks.

The neural processors enable real-time analysis of camera input while maintaining privacy and security standards.

What steps are needed to activate Visual Intelligence on an iPhone?

Go to Settings and select Apple Intelligence & Siri. Enable Apple Intelligence features.

Make sure iOS 18.2 or later is installed. Then press and hold the Camera Control button to open Visual Intelligence and point the camera at supported content.

How can issues with Visual Intelligence on the iPhone be troubleshooted?

Check that iOS is updated to version 18.2 or newer. Restart the iPhone if features aren’t working properly.

Ensure adequate lighting for the camera to clearly capture subjects. Clean the camera lens if image quality seems poor.
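The "iOS 18.2 or newer" check is easy to get wrong if versions are compared as plain text, because "18.10" sorts before "18.2" alphabetically. The following Python sketch shows a numeric comparison instead. The helper names `parse_version` and `meets_minimum` are made up for this example, and the default minimum of 18.2 is taken from the requirement stated in this guide.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '18.2.1' into (18, 2, 1)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(version: str, minimum: str = "18.2") -> bool:
    """Return True if `version` is at least `minimum`, compared numerically."""
    current = parse_version(version)
    required = parse_version(minimum)
    # Pad the shorter tuple with zeros so "18" is treated as "18.0".
    width = max(len(current), len(required))
    current += (0,) * (width - len(current))
    required += (0,) * (width - len(required))
    return current >= required


if __name__ == "__main__":
    for candidate in ["18.1.1", "18.2", "18.10", "26.0"]:
        print(candidate, meets_minimum(candidate))
```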

What are the benefits of using Visual Intelligence on the iPhone?

Text can be instantly translated, summarized, or read aloud. Contact information such as phone numbers and email addresses is automatically detected.

The system identifies objects and places, providing relevant details and information without manual searching.

What distinguishes Visual Intelligence on the iPhone 15 Pro Max from previous models?

The iPhone 15 Pro Max processes Visual Intelligence tasks with improved speed and accuracy compared to older devices.

Enhanced neural engine capabilities allow for more sophisticated real-time analysis of scenes and objects through the camera.